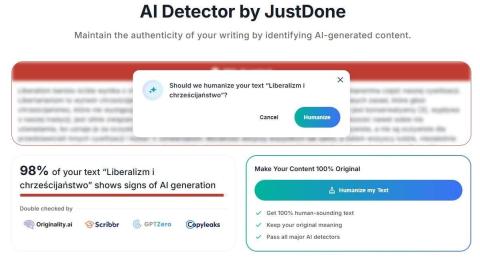

A text I wrote in 2014 was rated 98% AI-generated by an artificial intelligence detector. The detector then offered to "humanize" it, that is, to rework it until the detector itself would no longer believe it had been written by AI. AI detectors mostly treat the following features as signs of AI:

- Style and fluency: a long, well-argued, logical text with a coherent, even rhythm can resemble text with low "perplexity", i.e. highly predictable text.

- Common phrases and turns of speech: if I use formulations that occur frequently on the Internet, the classifier will treat them as typical of generated texts.

- Lack of specific, personal details: a text that does not reveal local details, anecdotes, dates, names, or personal asides is more easily classified as generic.

- Format and length: medium-length, formal essays are harder to tell apart; shorter or more chaotic texts look "more human" to detectors.
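The point about an "even rhythm" can be illustrated with a toy measure. The sketch below is only an assumption-laden illustration, not how any real detector works: detectors are commonly described as relying on language-model perplexity, which this code does not compute. It simply measures how much sentence lengths vary (standard deviation divided by mean). The function names `sentence_lengths` and `rhythm_variation` and both sample texts are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split text on '.', '!' or '?' and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def rhythm_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std / mean).

    A low value means an even, uniform rhythm; a high value means the text
    mixes very short and very long sentences. This is a toy proxy only,
    not the scoring used by any actual AI detector.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical samples: one perfectly even, one uneven ("bursty").
even = "The argument is clear. The premise is sound. The result is valid."
bursty = "No. The argument, despite what critics said in 2014 and later, holds up well. Really."

print(round(rhythm_variation(even), 3))
print(round(rhythm_variation(bursty), 3))
```

By a measure like this, a carefully edited essay with uniform sentences scores lower than a choppy one, which is the kind of signal that can push polished human writing toward an "AI" verdict.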

I have been blogging and writing notes for over 30 years. These features are exactly the hallmarks of my style: for years I have practiced writing clearly and meaningfully, leaving out anecdotes and intrusive personal asides, and instead of defending my views with emotion, relying on logically effective arguments.

In addition, I sometimes hand my notes to AI for editing, asking it to polish the style, remove repetitions and refine the text to perfection, so that it says exactly what I wanted. And then the detector concludes that it is 100% "AI-generated".

If such an assessment is treated as discrediting, it means that bloggers, because of AI, will have to spoil and "dirty" their texts on purpose, inserting pointless anecdotes and self-absorbed emotional asides. It's absurd!

If AI can determine that a text is AI-generated, then it can also rework that text so that AI no longer rates it as such. This will be an endless game of cat and mouse. Meanwhile, the point is to write clearly, logically, sensibly and wisely; if AI helps with that, all the better, because the better the texts we write and read, the better. AI detectors are therefore pointless and work against this goal: in effect, they push us to write worse.

Here is the same text as edited by AI:

AI detectors pick up on the features of solid texts: fluency, consistency, recurring phrases, the absence of "dirt" (personal asides), and a predictable length.

These are the hallmarks of my style. I have been writing notes for 30 years. My goals: clean, dense prose, no anecdotes, no emotional interference. My aim is to make an argument, not to put on a show.

A standard part of my workflow is asking an LLM to polish the text: to remove repetitions, clumsy sentences and padding. It is simply an editing tool. Then the machine says: "100% AI-generated". So who is cheating here?

If detectors are to serve as the deciding audit, then in practice they will force writing to regress: bloggers will have to "dirty" their texts with anecdotes and personal asides so that they do not look "AI-like". It's absurd.

If AI can detect these patterns, then technically it can also produce a revision that hides them. It is simply an endless game of cat and mouse. The test is not technical but intuitive: it unfairly discredits authors and degrades the quality of writing.

Grzegorz GPS Swiderski

https://t.me/CanalBlogeraGPS

https://Twitter.com/gps65

PS. Here is the text I wrote in 2014 that the detector attributed to AI: https://www.salon24.pl/u/gps65/490816,libertarianism-a-Christianity